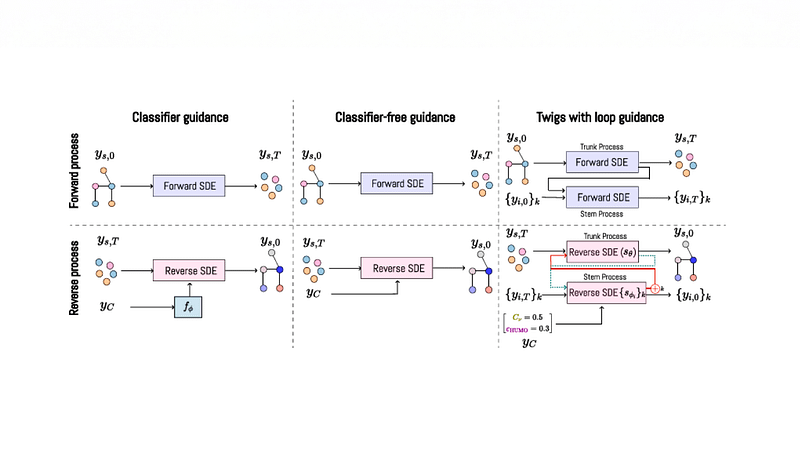

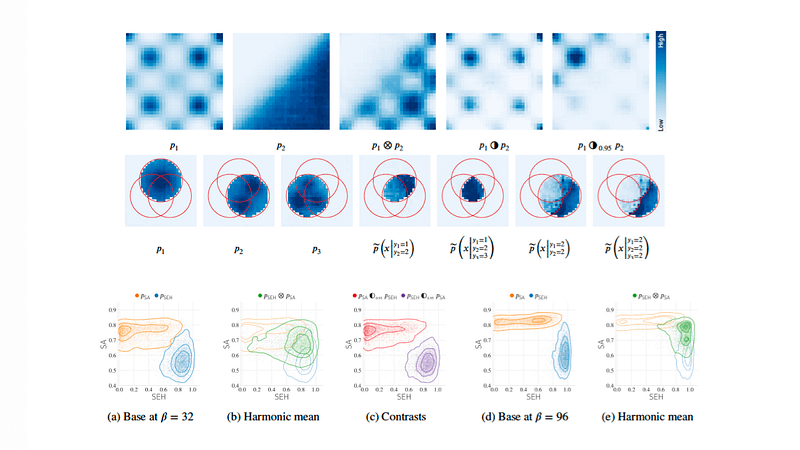

The massive cost of training large generative models has made model reuse and composition necessary for achieving the desired flexibility. In a fruitful collaboration with the Massachusetts Institute of Technology (MIT), we show how advanced generative techniques such as diffusion models and GFlowNets can be composed in a principled manner to go beyond what the individual pretrained models can achieve. We empirically validate our method on image and molecular generation tasks, and the approach paves the way for several promising opportunities. This work was published at NeurIPS 2023.

How do we benefit from pretrained generative AI models? Composition!


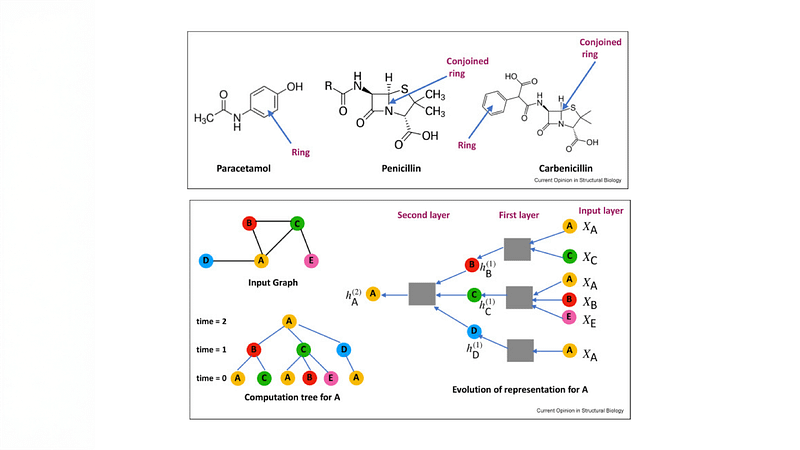

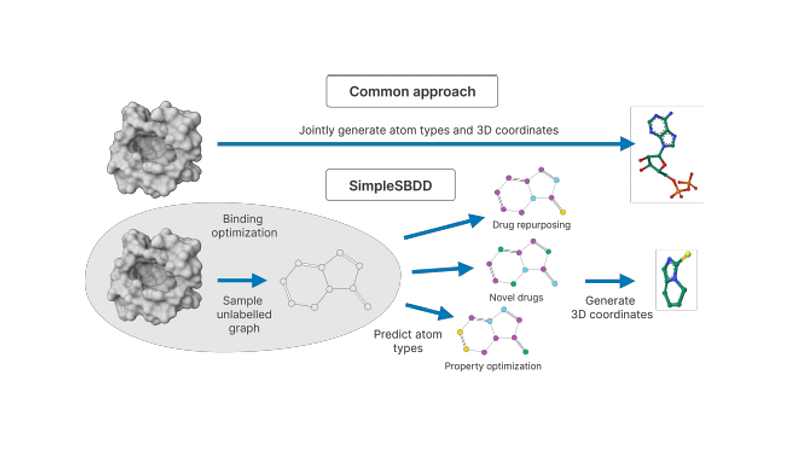
